A significant fraction of the money that people have paid for Generative AI has been for coding assistance. When I point to the positive uses of Generative AI, I invariably point to how GenAI serves as a kind of valuable autocomplete for coding.

In that context, a new study from METR, an AI benchmarking nonprofit, is shocking.

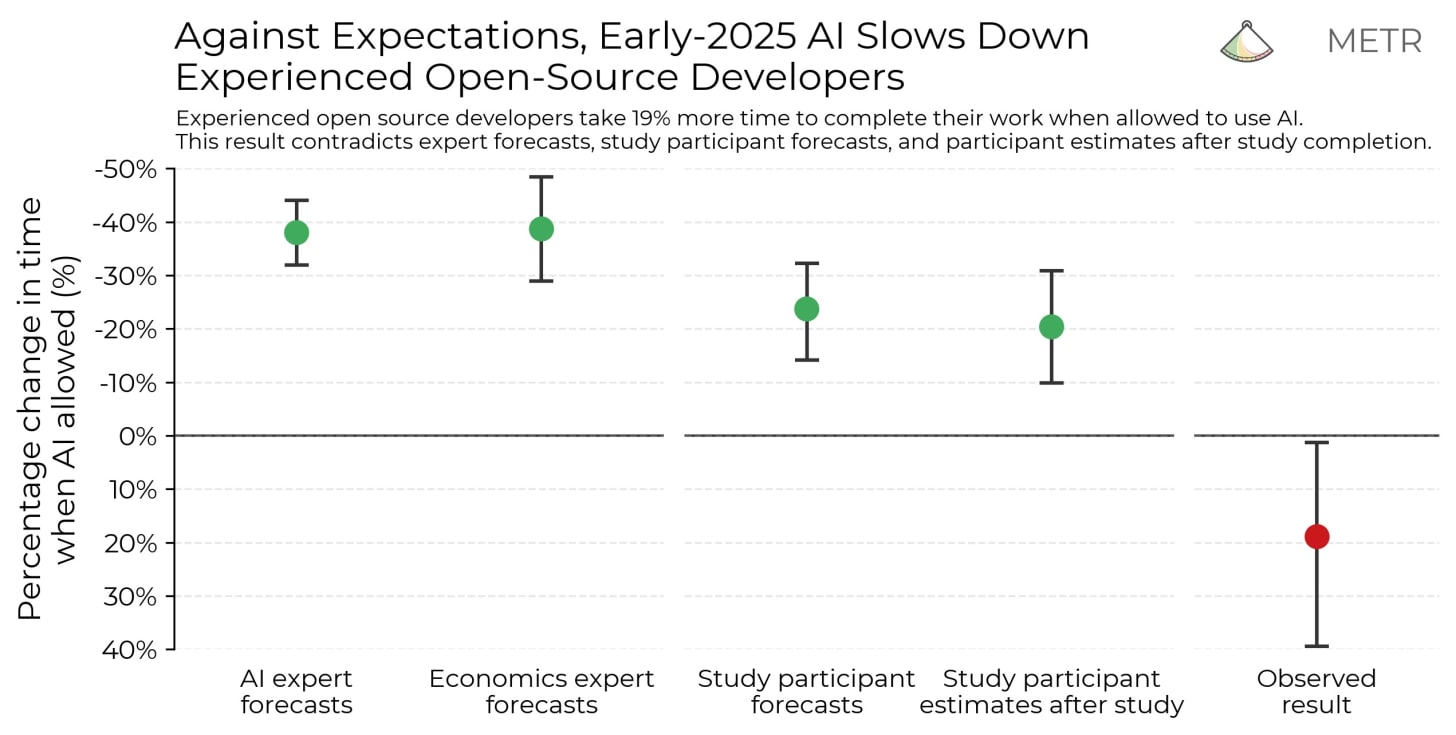

METR ran a randomized controlled trial in the first half of this year “to see whether AI tool usage at the February–June 2025 frontier (primarily Cursor Pro) sped-up experienced open-source developers.” According to an email they sent me, “Part of [the] motivation … was to understand how close we might be to automating the role of an AI lab research engineer.”

As they put it, “One of the often casually proposed methods for doing this is to just directly survey e.g. AI lab employees for anecdotes on how much they believe access to AI tools is speeding them up.” But anecdotal evidence is hardly trustworthy. METR did something a lot more hard-core: “Developers completed 245 tasks in mature projects on which they have an average of 4.9 years of prior experience.”

How much time did the coders save?

I will tell you in a moment. But, first, one of the most interesting parts of the study: METR asked the coders, both before and after the tasks, how much they thought the AI tools would speed them up (or had sped them up):

Ahead of completing the tasks developers pre-registered expectations that using AI tools would speed up overall task completion time by 24%. After task completion they estimated that task completion time was sped up by 20%. Machine learning and economics experts predicted even larger productivity gains

What actually happened?

Quoting METR, boldface theirs:

“current AI tools actually slowed down task completion time by 19%.”

IF this is a general, replicable finding, it’s a serious blow to generative AI’s flagship use case. People might be imagining productivity gains that they are not getting, and ignoring real-world costs, to boot.

§

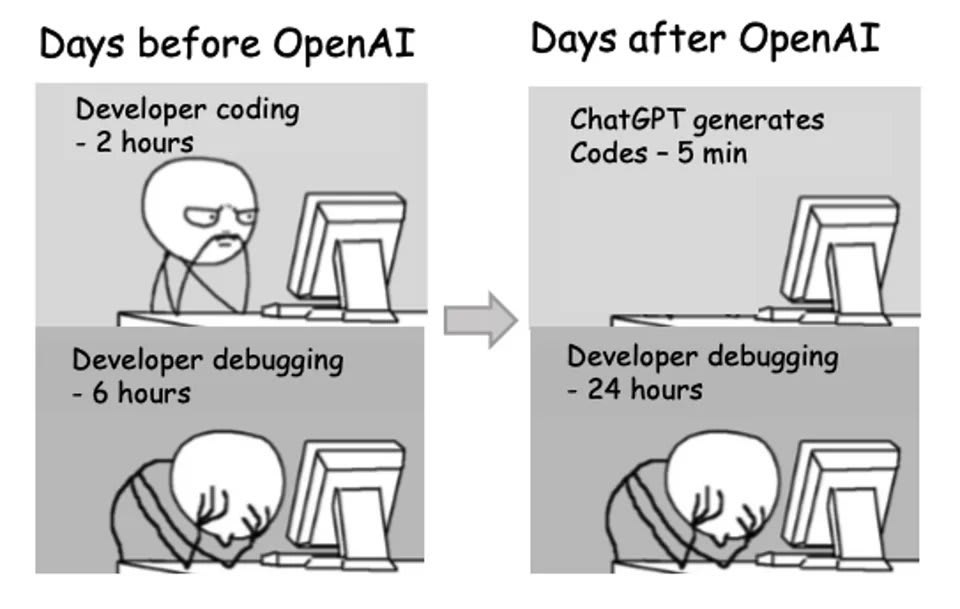

If it’s true, this 2023 cartoon (I couldn’t track down the artist) might give some insight into one underlying mechanism:

Debugging code you didn’t write can be hard.

§

As METR themselves note, there are many caveats:

… these are experienced developers working on large, complex codebases that, often, they helped build. We expect AI tools provide greater productivity benefits in other settings (e.g. on smaller projects, with less experienced developers, or with different quality standards). This study is importantly both a snapshot in time (early 2025) and on a unique (but important!) distribution of developers.

And they, perhaps a bit more optimistic about the pace of AI progress than I am, add: “We don’t think our results represent a fundamental limitation in model capability, or rule out a rapid change in the metric being studied soon.”

Time will tell. But for now, the disconnect between what coders thought they would get out of the tools efficiency-wise and what they actually did get out of them is cause for reevaluation.

It will be very interesting to see how this evolves over time.
