A rioter admitting he was "paid to be here". A National Guard soldier filming himself being bombarded by "balloons full of oil". A young man declaring his intention to "peacefully protest", before throwing a Molotov cocktail.

These are some things that are not happening on the streets of Los Angeles this week. But you may think they are if you’re getting your news from AI.

Fake AI-generated videos, photos, and factoids about the ongoing protests in LA are spreading like wildfire across social media, not least on Elon Musk's anything-goes social network X (formerly Twitter).

Made using freely available video and image generation software, these wholly synthetic chunks of outrage usually confirm some pre-existing narrative about the protests — such as the baseless idea that they are being covertly funded and equipped by mysterious outside factions.

And while some are technically labelled as parodies, many users miss these disclaimers and assume that the realistic-looking footage is an actual document of events on the ground.

"Hey everyone! Bob here on National Guard duty. Stick around, I'm giving you a behind the scenes look at how we prep our crowd control gear for today's gassing," says a simulated soldier in a viral TikTok video debunked by France24.

"Hey team!" he soon follows up. "Bob here, this is insane! They're chucking balloons full of oil at us, look!"

Another fake video posted on X features a male influencer wearing a too-clean T-shirt in the thick of a riot. "Why are you rioting?" he asks a masked man. "I don't know, I was paid to be here, and I just wanna destroy stuff," the man replies.

AI tries to fact-check AI

Meanwhile, chatbots such as OpenAI's ChatGPT and X's built-in Grok have been giving false answers to users' questions about events in the City of Angels.

Both Grok and ChatGPT wrongly insisted that photos of National Guard members crammed in together sleeping on the floor in LA this week "likely originated" in Afghanistan in 2021, according to CBS News.

It also reportedly claimed that a viral photo of bricks piled up on a pallet — which right-wing disinformation merchants had touted as proof of outside funding — was "likely" taken from the LA protests. In fact the photo showed a random street in New Jersey, but even when informed of the truth AI stuck to its guns.

AI-generated fakes are merely one new instrument in a long-established orchestra of disinformation. Photos recycled from past protests or events, photos taken out of context, and disguised video game footage — all commonplace among partisan outrage-peddlers since at least 2020 — are being shared widely, including by Republican senator Ted Cruz (who has form in this regard).

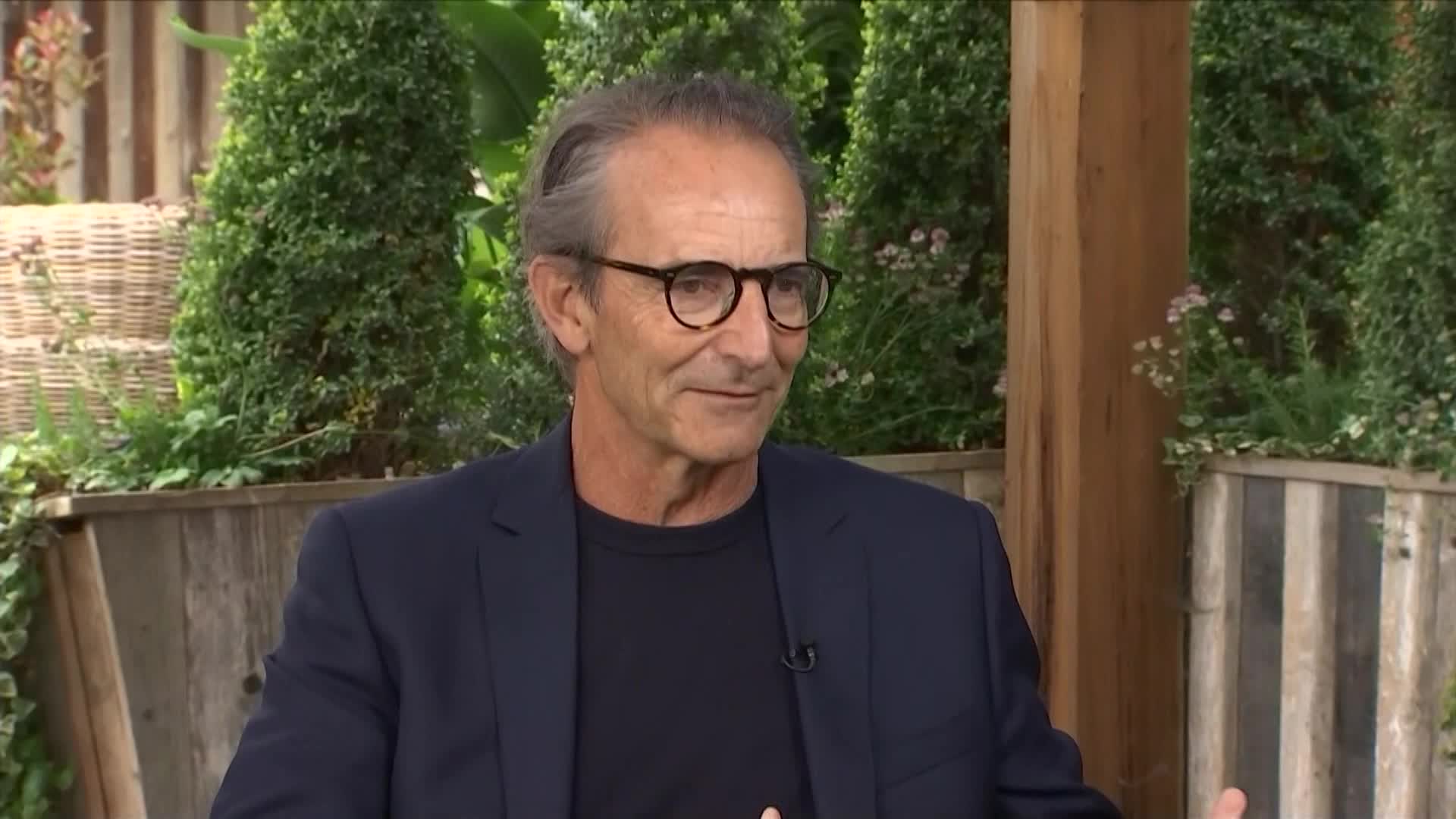

"Pictures are easily manipulated; that idea has been there," James Cohen, a media professor and expert on internet literacy at CUNY Queens College, told Politico.

"But when it comes to videos, we’ve just been trained as an individual society to believe videos. Up until recently, we haven’t really had the opportunity to assume videos could be faked at the scale that it’s being faked at this point."

Ammunition for the culture war

Most of the videos seen by The Independent were evidently targeted at a conservative audience, designed to reinforce or reference right-wing talking points. At least one TikTok account, however, with more than 300,000 views on its videos as of Wednesday evening, was aimed at progressives interested in stirring messages of solidarity with immigrants.

Some are obviously jokes; others, ambiguously jokes. Often there is a tag indicating that the video is AI-generated. But in most cases this crucial information is easily missed.

Unfortunately, the problem is only likely to get worse in future. Republicans' flagship "Big Beautiful Bill" includes a moratorium on all state regulation of AI, which would prevent any state government from intervening for 10 years.

While AI-generated videos can be difficult to tell from the real thing, there are ways. They're often suspiciously clean and glossy-looking, as if hailing from the same manicured universe as Kendall Jenner's infamous Pepsi protest advert. The people in them are often strangely beautiful, like the amalgamation of a million magazine photoshoots.

The Better Business Bureau also recommends scrutinizing key details such as fingers and coat buttons, which often don't make sense on close inspection. Writing, too, is frequently blurred and illegible: just a jumble of letters or letter-like forms.

Background figures may behave strangely or repetitively, or even move in ways that are physically impossible. If in doubt, Google it and see if any trustworthy media organizations or individual journalists have confirmed or debunked what you're seeing.

Most of all, be on the lookout for anything that seems to perfectly confirm your pre-existing beliefs. It may just be too good to be true.
